Caveat: The following blog is about a straightforward project; however, what's important isn't the technology. It's the process of understanding requirements and delivering to meet business demands.

Welcome to the third blog in this series, where I'll be covering Infrastructure-as-Code (IaC). The rise of IaC is in large part thanks to Public Cloud and the accompanying shift to Infrastructure-as-a-Service (IaaS). Being able to create and deploy entire environments containing thousands of components is the utopia that most strive towards; however, Rome wasn't built in a day.

Start small, learn lessons and pivot.

Doing IaC well is a journey, and on that journey making a bad decision is not necessarily the wrong decision. Let me elaborate.

Step 1) On day one, avoid trying to architect and build the ultimate solution; instead, aim to deploy a single application in a single environment. Why? Because IaC brings both benefits and challenges, and when you adopt it for the first time you need to experience both to get a fuller understanding of the capability.

Step 2) After building out your first couple of applications you'll experience duplication of code (this won't be the first, nor the last, time you'll experience this pain). So the next step is to modularise your code into reusable chunks. In my example below I'm using Terraform (a HashiCorp product), which supports writing modules. An excellent example is a module that builds an AWS EC2 instance or GCP VM and may include sub-dependencies like additional block storage. You should aim to be able to consume the module without passing any arguments and have it produce a vanilla instance.
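
To make the module idea concrete, here is a minimal sketch rather than the code from my repository. It assumes AWS, a default VPC in the target region, and a hypothetical module folder at ./modules/instance; the variable names, instance type and AMI filter are illustrative choices of mine. Every input has a default, so the caller does not need to pass anything.

```hcl
# modules/instance/main.tf (hypothetical path and names)

variable "instance_type" {
  description = "EC2 instance size; the default keeps the vanilla build cheap."
  type        = string
  default     = "t3.micro"
}

variable "data_volume_size_gb" {
  description = "Size of the additional EBS data volume in GiB."
  type        = number
  default     = 20
}

# Look up a recent Amazon Linux 2 AMI so the caller does not have to supply one.
data "aws_ami" "amazon_linux" {
  most_recent = true
  owners      = ["amazon"]

  filter {
    name   = "name"
    values = ["amzn2-ami-hvm-*-x86_64-gp2"]
  }
}

# No subnet is specified, so this assumes a default VPC exists in the region.
resource "aws_instance" "this" {
  ami           = data.aws_ami.amazon_linux.id
  instance_type = var.instance_type
}

# Sub-dependency: extra block storage created in the same AZ as the instance.
resource "aws_ebs_volume" "data" {
  availability_zone = aws_instance.this.availability_zone
  size              = var.data_volume_size_gb
}

resource "aws_volume_attachment" "data" {
  device_name = "/dev/sdf"
  volume_id   = aws_ebs_volume.data.id
  instance_id = aws_instance.this.id
}

output "instance_id" {
  value = aws_instance.this.id
}
```

Consuming it from the root configuration then needs nothing more than the source:

```hcl
# main.tf in the root folder: no arguments, so every default above applies.
module "app_server" {
  source = "./modules/instance"
}
```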

Step 3) The next hurdle you'll need to overcome is creating a Route-to-Live (RTL). In native open-source Terraform, this can materialise as folder-level duplication unless you adopt a third-party wrapper like Terragrunt. HashiCorp has another solution called Terraform Enterprise (TfE), which is engineered for organisations with multiple projects and multiple environments. Of course, you can develop your own solution to work around this challenge; however, remember that self-developed solutions don't come free either (time to build, support and onboard).
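
As a rough illustration of what folder-level duplication tends to look like, the sketch below assumes one folder per environment, each calling the same hypothetical local module (../../modules/instance, accepting an instance_type input). The folder names and values are made up for this example.

```hcl
# environments/dev/main.tf (hypothetical layout)
module "app_server" {
  source        = "../../modules/instance"
  instance_type = "t3.micro"
}
```

```hcl
# environments/prod/main.tf: a near-identical copy with different values
module "app_server" {
  source        = "../../modules/instance"
  instance_type = "m5.large"
}
```

Each folder is planned and applied on its own, so every new environment means another copy to keep in step with the rest.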

Step 4) Now that you have automated infrastructure builds across environments, the next challenge is a supporting CI/CD pipeline where you can integrate applications, infrastructure builds and configuration management. At this point, you're getting reasonably mature; whatever the solution for this step looks like, by embracing the previous lessons across your team you will have the capability to build, run and maintain the IaC elements. There are hundreds, if not thousands, of solutions that can be 'cobbled' together, but remember to stick to requirements and focus on the outcome you are trying to achieve.

State

One of the most significant benefits of a good IaC tool is managing state. So what is 'state'? Simply put, state means that the IaC tool records the configuration of each of the components it deployed. If you re-run the same code weeks later, it checks the current setup in real time against the state it stored from the previous run. The delta is presented to you, and you are asked whether you want to re-deploy the configuration defined in the codebase back to the environment. Why is this a good thing? One behaviour we need to discourage is manual change. By stepping away from manual actions, you can confidently redeploy an application to a known point (for example, in a DR scenario).
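
A small sketch of how this plays out in Terraform, assuming AWS; the bucket name and tag are hypothetical and not taken from my repository.

```hcl
# After 'terraform apply', Terraform records this bucket and its tags in state.
resource "aws_s3_bucket" "artifacts" {
  # Hypothetical name; S3 bucket names must be globally unique.
  bucket = "example-pipeline-artifacts"

  tags = {
    Owner = "platform-team"
  }
}

# If someone later edits the Owner tag by hand in the console, the next
# 'terraform plan' refreshes the real configuration, compares it with the
# code and stored state, and proposes an in-place update that puts the tag
# back to "platform-team". Nothing changes until you confirm the apply.
```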

Ansible

Previously I've used Ansible to create and manage infrastructure (which it is capable of doing natively); however, with large multi-project environments, each with dependencies on shared services or core infrastructure, you come to recognise that this tool is not the best strategic option. Ansible is a fantastic configuration management tool, but handling infrastructure state is not its forte.

My Solution

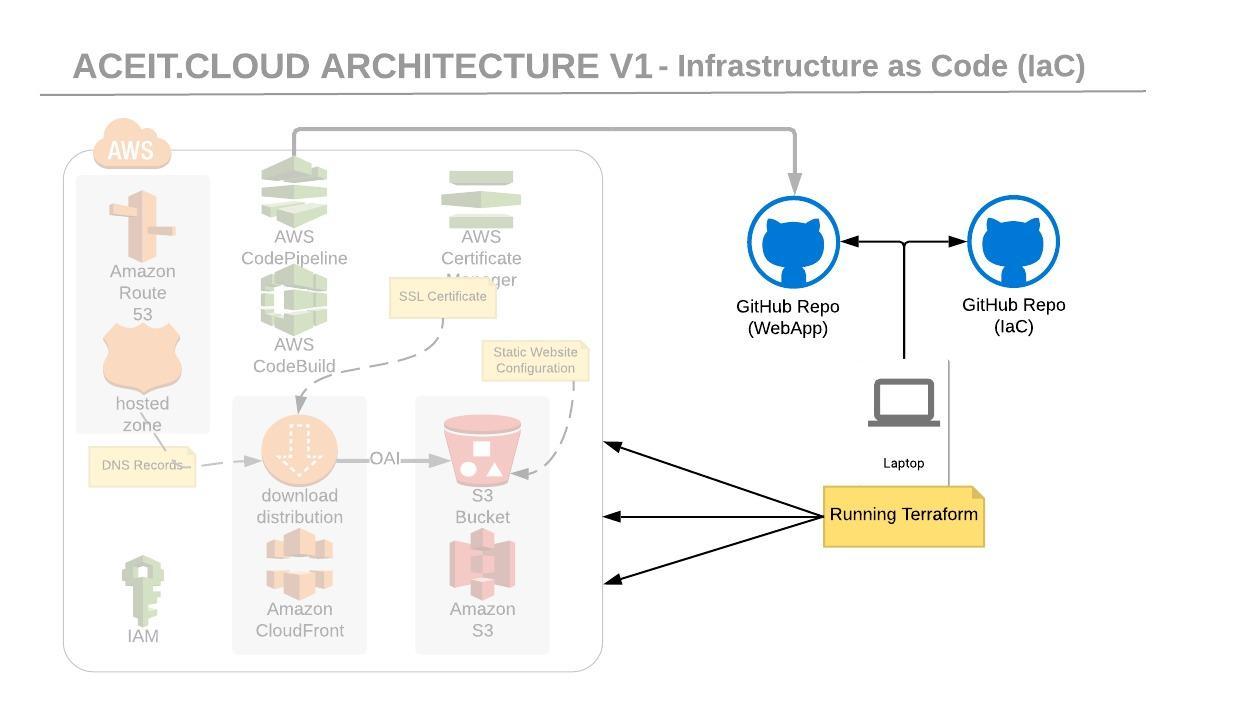

I'm using open-source Terraform, and my current deployment mimics the pattern described in step one. I've had the benefit of learning from the previously mentioned steps; however, my solution has a single project and currently one environment, so I do not need modules or complex pipelines (remember, keep it simple).

Why am I not using CloudFormation in AWS?

CloudFormation is a fantastic tool and is regularly updated by AWS. I've chosen Terraform as a personal preference, based on my experience of both products. A common argument is that Terraform supports multi-cloud; however, in the unlikely event that you switch cloud providers, even with Terraform you need to rewrite your code to deploy the different infrastructure components (the code to create a VM in GCP is not the same as the code to create a VM in AWS). That said, if you are pursuing a multi-cloud strategy, reducing your technology footprint is a wise decision to make.

Living up to #SharingisCaring

I am also sharing a copy of the Terraform code used to deploy what I've discussed in the previous two parts of this blog. One caveat: as this blog series continues, there will be changes made to the code, especially when we move on to discuss security and least-privilege access.

Prerequisites

To make this work for you, you'll need to have:

- Terraform installed

- A GitHub repo set up with supporting access for CodePipeline (in your GitHub account go to Settings -> Developer settings -> Personal access tokens to generate a new token). The token is stored in terraform.tfvars.

- A domain managed in AWS; otherwise, you won't be able to validate the ACM cert or create DNS entries.

- AWS CLI installed with valid AWS IAM keys (stored in ~/.aws/credentials under a profile matching the Terraform provider profile name).
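
For the last prerequisite, the link between the AWS CLI credentials and Terraform is the profile name on the provider. A minimal sketch, assuming a hypothetical profile called my-profile and an arbitrary region; check the repository for the values it actually expects.

```hcl
provider "aws" {
  # Assumed region for illustration only.
  region = "eu-west-1"

  # Hypothetical profile name; it must match a [my-profile] section
  # in your local AWS credentials file.
  profile = "my-profile"
}
```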

Repository: https://github.com/colin-lyman/aceit-terraform-sample

Important: do not commit your terraform.tfvars to a repository; it is designed to be stored locally only. I've included a sample config for demonstration purposes. There are further improvements we could make to this code, including extracting more parameters into the variables file.
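
To show how the variables file and terraform.tfvars fit together, here is a short sketch. The variable names github_token and domain_name are hypothetical and may not match those in the repository, and the sensitive flag assumes Terraform 0.14 or later.

```hcl
# variables.tf: declarations are safe to commit because they hold no values.
variable "github_token" {
  description = "GitHub personal access token used by CodePipeline."
  type        = string
  sensitive   = true # redacts the value in plan and apply output
}

variable "domain_name" {
  description = "Domain managed in AWS, used for the ACM cert and DNS entries."
  type        = string
}
```

```hcl
# terraform.tfvars: values only, kept on your local machine.
github_token = "REPLACE_WITH_YOUR_TOKEN"
domain_name  = "example.com"
```

Listing terraform.tfvars in your .gitignore is a simple way to avoid committing it by accident.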

Assuming you have met the prerequisites above, run 'terraform init' in the local folder, which will pull down the provider code (providers are Terraform plugins used to support third-party integrations like AWS, GCP and Azure). Next, configure the variables in terraform.tfvars and run 'make plan'. Natively you'd run 'terraform plan'; however, the make file is a shortcut that includes the terraform.tfvars file each time. Once run, you'll be presented with a list of resources that it wants to create. You can also run 'make', which runs the plan and apply commands and asks you to confirm that you want to deploy the resources.

Hopefully, this has been a useful starting point in understanding IaC. There are plenty of free learning materials and communities specialising in each technology.

If you want some help in adopting the cloud, feel free to reach out to me using the contact details below.